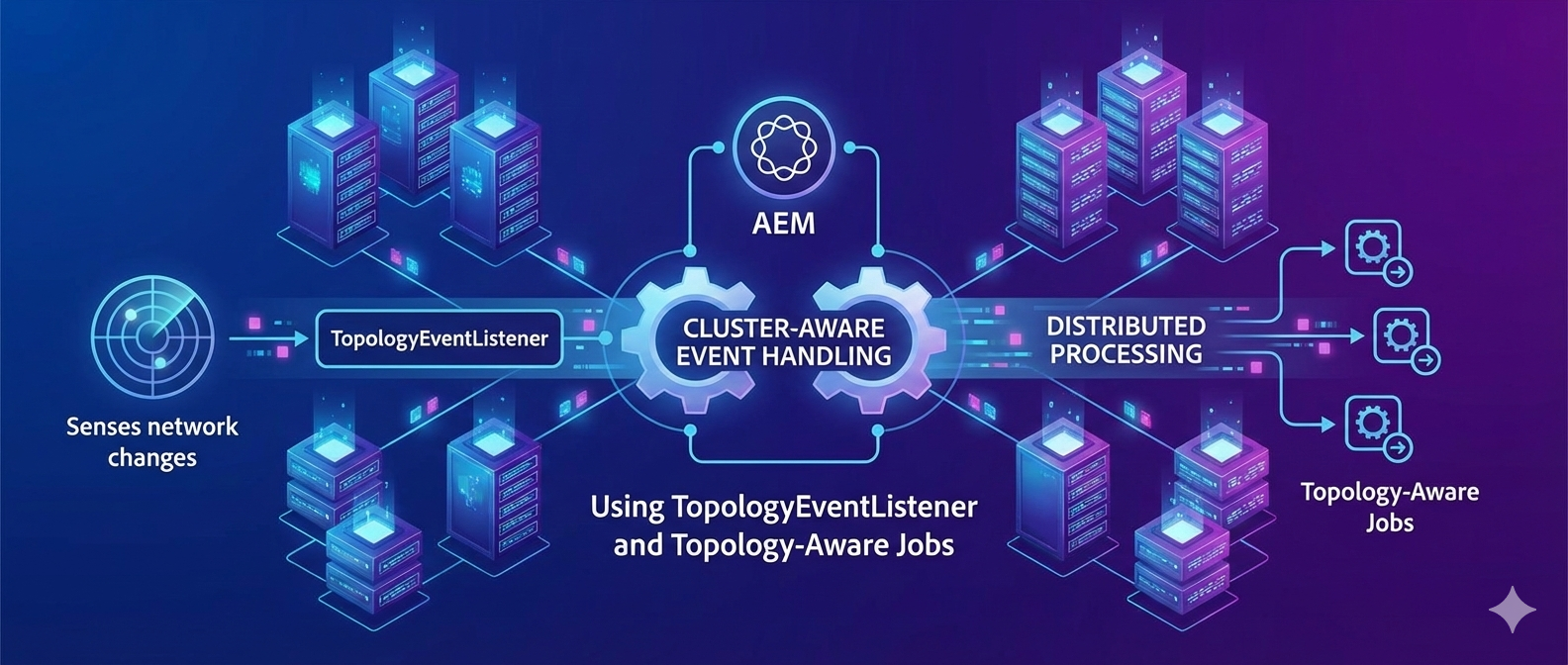

Cluster-aware event handling in AEM: using TopologyEventListener and topology-aware jobs

Why “cluster‑aware” matters in AEM (especially Cloud Service)

On AEM as a Cloud Service, code always runs in a cluster, instances are ephemeral, and leader election can be in progress when events fire. Your background work must tolerate restarts and resume reliably. If you need a single “primary” to act, use Apache Sling Discovery to identify the leader. [Reference link]

Apache Sling Discovery models your deployment’s topology (instances ↔ clusters). Key types:

- TopologyView: snapshot of the current topology; exposes getLocalInstance() and isCurrent(). [sling.apache.org]
- InstanceDescription: per‑instance descriptor with isLeader() and isLocal() flags. [sling.apache.org]
- ClusterView: the set of instances with one stable leader at a time. [developer.adobe.com]

You obtain the current view via DiscoveryService#getTopology(). [sling.apache.org]

The legacy Granite/JCR ClusterAware interface is deprecated; replace it with TopologyEventListener. [developer.adobe.com]

Quick recap: event options you covered previously

- OSGi EventHandler (Sling/OSGi topics): Use for system‑level signals (replication, jobs, resource events). [stacknowledge.in]
- JCR EventListener (repository observation): Low‑level, heavy; not cluster‑safe by default; often replaced by Sling resource observation. [stacknowledge.in]
- Sling ResourceChangeListener: Higher‑level, modern listener for resource changes (preferred over JCR observation in clustered setups). [stacknowledge.in]
- Sling Jobs (JobManager + JobConsumer): Reliable “at least once” background processing, persisted under /var/eventing/jobs and distributed across the topology. [stacknowledge.in]

TopologyEventListener: reacting to cluster changes

Implement TopologyEventListener to be notified when the topology changes (initialization, changing, changed, properties changed). Keep handlers fast and non‑blocking. [sling.apache.org], [apache.goo…source.com]

Minimal example

    package com.example.core.cluster;

    import org.apache.sling.discovery.TopologyEvent;
    import org.apache.sling.discovery.TopologyEventListener;
    import org.osgi.service.component.annotations.Component;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    @Component(service = TopologyEventListener.class, immediate = true)
    public class ClusterTopologyObserver implements TopologyEventListener {

        private static final Logger LOG = LoggerFactory.getLogger(ClusterTopologyObserver.class);

        @Override
        public void handleTopologyEvent(final TopologyEvent event) {
            switch (event.getType()) {
                case TOPOLOGY_INIT:
                    LOG.info("Topology initialized: {}", event);
                    // Warm up caches, start leader-only schedulers (see below)
                    break;
                case TOPOLOGY_CHANGING:
                    LOG.info("Topology changing: {}", event);
                    // Pause work that assumes a stable leader
                    break;
                case TOPOLOGY_CHANGED:
                    LOG.info("Topology changed: {}", event);
                    // Re-check leader; reconfigure queues/schedulers
                    break;
                case PROPERTIES_CHANGED:
                    LOG.info("Topology properties changed: {}", event);
                    break;
                default:
                    LOG.debug("Unhandled topology event: {}", event);
            }
        }
    }

The event types above are defined by the Discovery API; the listener must return quickly and avoid locks.

Leader‑only execution: gate work with Discovery API

When only one node should perform a task (e.g., a job scheduler, deduped producer), gate it with isLeader() from the current topology view:

    package com.example.core.cluster;

    import org.apache.sling.discovery.DiscoveryService;
    import org.apache.sling.discovery.TopologyView;
    import org.osgi.service.component.annotations.Component;
    import org.osgi.service.component.annotations.Reference;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    @Component(service = LeaderGate.class)
    public class LeaderGate {

        private static final Logger LOG = LoggerFactory.getLogger(LeaderGate.class);

        @Reference
        private DiscoveryService discoveryService; // Obtain current topology view

        public boolean isLeaderNow() {
            // TopologyView is synchronized with TopologyEventListener callbacks
            final TopologyView view = discoveryService.getTopology();
            boolean leader = view.getLocalInstance().isLeader();
            LOG.debug("Leader check: isLeader={} (isCurrent={})", leader, view.isCurrent());
            return leader;
        }
    }

- DiscoveryService#getTopology() returns the last discovered view (never null), blocking if a topology event is being delivered to keep state consistent. [sling.apache.org]
- TopologyView#getLocalInstance().isLeader() tells you if the local instance is the cluster leader. [sling.apache.org], [sling.apache.org]

In AEM as a Cloud Service, designing for leader‑only work aligns with the platform’s guidance to make code cluster‑aware and resilient. [experience….adobe.com]

Reliable background work with Sling Jobs (topology‑aware by design)

Sling Jobs provide persistent, at‑least‑once processing, with storage under /var/eventing/jobs and assignment per instance subtree to avoid concurrent processing across nodes. Only one instance processes a given job at a time; if a consumer fails, the framework retries.

Producer & consumer

    // Producer
    package com.example.core.jobs;

    import java.util.HashMap;
    import java.util.Map;

    import org.apache.sling.event.jobs.JobManager;
    import org.osgi.service.component.annotations.Component;
    import org.osgi.service.component.annotations.Reference;

    // Registered as a service so other components can inject it and call enqueueReport()
    @Component(service = ReportJobCreator.class, immediate = true)
    public class ReportJobCreator {

        public static final String TOPIC = "com/example/jobs/report";

        @Reference
        private JobManager jobManager;

        public void enqueueReport(String reportId) {
            Map<String, Object> props = new HashMap<>();
            props.put("reportId", reportId);
            jobManager.addJob(TOPIC, props);
        }
    }

    // Consumer
    package com.example.core.jobs;

    import org.apache.sling.event.jobs.Job;
    import org.apache.sling.event.jobs.consumer.JobConsumer;
    import org.osgi.service.component.annotations.Component;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    @Component(
        service = JobConsumer.class,
        property = { JobConsumer.PROPERTY_TOPICS + "=" + ReportJobCreator.TOPIC },
        immediate = true
    )
    public class ReportJobConsumer implements JobConsumer {

        private static final Logger LOG = LoggerFactory.getLogger(ReportJobConsumer.class);

        @Override
        public JobResult process(final Job job) {
            try {
                String id = (String) job.getProperty("reportId");
                // Do the work (idempotent!)
                LOG.info("Generating report {}", id);
                return JobResult.OK;
            } catch (Exception e) {
                LOG.error("Job failed", e);
                return JobResult.FAILED; // Will be retried
            }
        }
    }

- At‑least‑once execution; design the consumer to be idempotent.
- Jobs are persisted and assigned under topology‑aware subtrees (e.g., /var/eventing/jobs/assigned/...), which prevents two instances from processing the same job.
- Use JobManager APIs (addJob, createJob) and queue configs to tune concurrency/order.
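The idempotency requirement can be illustrated with a minimal plain-Java sketch. It is not part of the Sling API: IdempotentReportWorker is a hypothetical class, and the in-memory set is only a stand-in for a persisted "done" marker (in AEM that marker would have to live in durable storage, since instances can be replaced at any time). It also assumes a given job is handled by one thread at a time, which matches how the job framework assigns work.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical idempotent worker: a redelivered job for the same reportId is a no-op. */
class IdempotentReportWorker {

    // Stand-in for a persisted "done" marker; in-memory state would not survive a restart.
    private final Set<String> completed = ConcurrentHashMap.newKeySet();
    private final AtomicInteger generated = new AtomicInteger();

    /** Returns true if the report was generated now, false if it had already been done. */
    boolean process(String reportId) {
        if (completed.contains(reportId)) {
            return false;            // duplicate delivery: skip side effects
        }
        generated.incrementAndGet(); // the real report generation would go here
        completed.add(reportId);     // mark as done only after the work succeeded
        return true;
    }

    int generatedCount() {
        return generated.get();
    }
}
```

A consumer like ReportJobConsumer could delegate to such a worker and return JobResult.OK in both cases, since a skipped duplicate is still a successful outcome.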

For schedulers that should fire once per cluster, set scheduler.runOn=LEADER, a recommended pattern in clustered AEM.

Combining OSGi EventHandler with cluster awareness

Replication and distribution events often arrive on every pod. If your handler must act exactly once (e.g., notify an external system), use either:

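1. Leader gating inside the handler:

       package com.example.core.cluster;

       import org.osgi.service.component.annotations.Component;
       import org.osgi.service.component.annotations.Reference;
       import org.osgi.service.event.Event;
       import org.osgi.service.event.EventConstants;
       import org.osgi.service.event.EventHandler;

       @Component(service = EventHandler.class, immediate = true,
           property = { EventConstants.EVENT_TOPIC + "=" + com.day.cq.replication.ReplicationAction.EVENT_TOPIC })
       public class ReplicationEventHandler implements EventHandler {

           @Reference
           private LeaderGate leaderGate;

           @Override
           public void handleEvent(final Event event) {
               // Non-leaders ignore the event; only the leader acts on it
               if (!leaderGate.isLeaderNow()) { return; }
               // Safe: execute once per topology
           }
       }

2. Subscribe to distribution completion topics that reflect successful dissemination, reducing duplicates.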

Modern alternative to JCR observation: ResourceChangeListener

Prefer ResourceChangeListener for content changes; it avoids long‑lived sessions and better fits clustered AEM. For local‑only vs distributed events, choose the appropriate listener type and design to avoid duplicate work. [experience….adobe.com]

Patterns you can use

1) Leader‑only producers, distributed consumers

- Only the leader enqueues jobs (e.g., a nightly batch).

- Any node may consume, but the framework ensures one instance processes each job.
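The leader-only producer in pattern 1 can be sketched in plain Java as follows. This is an illustration under stated assumptions rather than AEM API: LeaderOnlyProducer is a hypothetical class, and the BooleanSupplier and Consumer<String> parameters stand in for LeaderGate#isLeaderNow and ReportJobCreator#enqueueReport so the logic can run without an OSGi container.

```java
import java.util.function.BooleanSupplier;
import java.util.function.Consumer;

/** Hypothetical helper: enqueues work only when the local instance is the leader. */
class LeaderOnlyProducer {

    private final BooleanSupplier isLeader; // stand-in for LeaderGate#isLeaderNow
    private final Consumer<String> enqueue; // stand-in for ReportJobCreator#enqueueReport

    LeaderOnlyProducer(BooleanSupplier isLeader, Consumer<String> enqueue) {
        this.isLeader = isLeader;
        this.enqueue = enqueue;
    }

    /** Returns true if the job was enqueued, false if this instance skipped it. */
    boolean produce(String reportId) {
        if (!isLeader.getAsBoolean()) {
            return false; // non-leaders stay silent; the leader enqueues
        }
        enqueue.accept(reportId);
        return true;
    }
}
```

Inside an OSGi component, the two arguments could be wired as leaderGate::isLeaderNow and reportJobCreator::enqueueReport. Consumption needs no such gate, because the job framework already hands each job to a single instance.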

2) Topology‑change hooks for resilience

- On TOPOLOGY_CHANGING, pause cache flushers or producers.
- On TOPOLOGY_CHANGED, refresh leader state and resume.
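The pause-and-resume behaviour of pattern 2 boils down to a small piece of state. The sketch below is plain Java and makes several assumptions: TopologyWorkGate is a hypothetical class, its EventType enum is a local stand-in that only mirrors the names of TopologyEvent.Type, and the localIsLeader argument represents whatever getLocalInstance().isLeader() reported for the new view.

```java
/** Hypothetical gate that tracks whether leader-dependent work may run. */
class TopologyWorkGate {

    /** Local stand-in mirroring the names of TopologyEvent.Type. */
    enum EventType { TOPOLOGY_INIT, TOPOLOGY_CHANGING, TOPOLOGY_CHANGED, PROPERTIES_CHANGED }

    private volatile boolean stable = false;
    private volatile boolean leader = false;

    /** Call from the topology callback; localIsLeader is ignored while the topology is changing. */
    void onEvent(EventType type, boolean localIsLeader) {
        switch (type) {
            case TOPOLOGY_INIT:
            case TOPOLOGY_CHANGED:
                leader = localIsLeader; // refresh leader state first
                stable = true;          // then resume
                break;
            case TOPOLOGY_CHANGING:
                stable = false;         // pause until the topology settles
                break;
            default:
                break;                  // property changes do not affect the gate
        }
    }

    /** True only when the topology is settled and this instance is the leader. */
    boolean mayRunLeaderWork() {
        return stable && leader;
    }
}
```

A listener such as ClusterTopologyObserver could update the gate from handleTopologyEvent, while producers and cache flushers check mayRunLeaderWork() before each run. The callback itself only flips two flags, which keeps it fast and non-blocking.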

3) Leader‑only schedulers

- Configure Sling Commons Scheduler with scheduler.runOn=LEADER so a recurring task fires once per cluster.

4) Dedup in event handlers

- Handlers can trigger twice in Cloud Service; guard with leader checks or switch to distribution completion topics.

Migration notes: from ClusterAware to Discovery/Topology events

If you have older code on com.day.cq.jcrclustersupport.ClusterAware, replace it with:

- TopologyEventListener for reacting to cluster changes, and
- DiscoveryService + isLeader() for leader selection.

Operational tips

- Event visibility: OSGi events & job stats are accessible via Sling/AEM consoles; monitor job queues and /var/eventing/jobs.
- Design for retries: Sling Jobs retry on failure; ensure idempotency and checkpointing.

- Cloud Service guidelines: Persist state, avoid local filesystem, plan for instance replacement at any time.
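Checkpointing, as mentioned in the retry tip, might look like the following plain-Java sketch. Everything here is an assumption made for illustration: CheckpointedBatch is a hypothetical class, and the Map passed to it stands in for durable storage (for example a repository property), since neither memory nor the local filesystem survives instance replacement.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical batch runner that records progress so a retry resumes where it stopped. */
class CheckpointedBatch {

    // Stand-in for durable storage; a real implementation would persist this outside the instance.
    private final Map<String, Integer> checkpoints;

    CheckpointedBatch(Map<String, Integer> checkpoints) {
        this.checkpoints = checkpoints;
    }

    /** Processes items from the saved checkpoint onward; an exception leaves the checkpoint intact. */
    void run(String jobId, List<String> items, Consumer<String> handler) {
        int start = checkpoints.getOrDefault(jobId, 0);
        for (int i = start; i < items.size(); i++) {
            handler.accept(items.get(i));  // may throw; a retry would call run() again
            checkpoints.put(jobId, i + 1); // record progress after each successful item
        }
    }
}
```

If the handler fails on the second item, the next attempt starts at that item rather than at the beginning. The failed item itself can still be attempted more than once, so its handler should remain idempotent.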
